How to improve RealTime performance

LinuxCNC runs on Linux using real time extensions.

Realtime extensions allow processes to meet strict timing requirements, often down to the tens of microseconds. Support currently exists for versions 2.2, 2.4 and 2.6 of the Linux kernel with real time extensions applied by RT-Linux or RTAI patches.

Because LinuxCNC needs real time extensions, it cannot be run with the standard kernel supplied by most Linux distributions. However, a properly patched kernel is installed automatically when the LinuxCNC .deb package is installed, which greatly simplifies this task for the end user. Previously this requirement meant you needed a custom Linux distribution, but now LinuxCNC can be installed on most Debian-based distributions.

(stolen shamelessly from the [[RTlinux HOWTO http://www.faqs.org/docs/Linux-HOWTO/RTLinux-HOWTO.html]])

The reasons for the design of RTLinux can be understood by examining the working of the standard Linux kernel. The Linux kernel separates the hardware from the user-level tasks. The kernel uses scheduling algorithms and assigns a priority to each task in order to provide good average performance or throughput. Thus the kernel has the ability to suspend any user-level task once that task has outrun the time-slice allotted to it by the CPU. These scheduling algorithms, along with device drivers, uninterruptible system calls, the use of interrupt disabling and virtual memory operations, are sources of unpredictability. That is to say, these sources hinder the realtime performance of a task.

You might already be familiar with the non-realtime performance, say, when you are listening to the music played using 'mpg123' or any other player. After executing this process for a pre-determined time-slice, the standard Linux kernel could preempt the task and give the CPU to another one (e.g. one that boots up the X server or Netscape). Consequently, the continuity of the music is lost. Thus, in trying to ensure fair distribution of CPU time among all processes, the kernel can prevent other events from occurring.

A realtime kernel should be able to guarantee the timing requirements of the processes under it. The RTLinux kernel accomplishes realtime performance by removing the sources of unpredictability discussed above. We can consider the RTLinux kernel as sitting between the standard Linux kernel and the hardware. The Linux kernel sees the realtime layer as the actual hardware. Now the user can both introduce tasks and assign a priority to each of them. The user can achieve correct timing for the processes by deciding on the scheduling algorithms, priorities, frequency of execution etc. The RTLinux kernel assigns the lowest priority to the standard Linux kernel. Thus the user task will be executed in realtime.

The actual realtime performance is obtained by intercepting all hardware interrupts. The appropriate interrupt service routine is run only for those interrupts that are related to RTLinux. All other interrupts are held and passed to the Linux kernel as software interrupts when the RTLinux kernel is idle, and only then does the standard Linux kernel run. The RTLinux executive is itself nonpreemptible.

Realtime tasks are privileged (that is, they have direct access to hardware), and they do not use virtual memory. Realtime tasks are written as special Linux modules that can be dynamically loaded into memory. The initialization code for a realtime task initializes the realtime task structure and informs the RTLinux kernel of its deadline, period, and release-time constraints.

RTLinux co-exists along with the Linux kernel since it leaves the Linux kernel untouched. Via a set of relatively simple modifications, it manages to convert the existing Linux kernel into a hard realtime environment without hindering future Linux development.

while true ; do echo "nothing" > /dev/null ; done

I saw some other cache related behavior a long time ago when doing some latency testing. The latency results improved noticeably when I lowered the thread period below some threshold. (I don't remember the threshold period, it was at least a year ago.)

I eventually realized that when I was running the thread very frequently, the RT code never got pushed out of cache. When I increased the period, other processes had enough time to replace the RT code in cache between invocations of the thread.

(*) "cpu hog": a computer program that eats unneeded processor speed on your computer to appear that it is doing more than it actually is, or it's just coded really badly

(hase) I disagree with the assessment: the CPU hog does improve latency, but it has nothing to do with the cache. This is actually a power saving feature in the CPU: when idle, the CPU is put into a power-saving state and it takes some time to wake up from that, hence the latency in reacting to the timer interrupt. The CPU hog running makes sure the CPU is never idle -> never sleeps -> never requires a long wakeup time.

The Intel Core2Duo benefits greatly from the idle=poll parameter to the kernel, which disables the deep-sleep C-State of the CPU. The effect is equal to that of hogging one CPU core.
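To see which idle states your CPU exposes and how long each one reports needing to wake up, you can read the kernel's cpuidle entries in sysfs. The following is a minimal sketch, assuming your kernel provides /sys/devices/system/cpu/cpu0/cpuidle; the helper name list_cstates is made up for this example.

```shell
#!/bin/sh
# List the idle states (C-states) the kernel exposes for one CPU, with
# the wake-up (exit) latency in microseconds that each state reports.
# Assumption: the kernel provides the cpuidle sysfs interface.
# list_cstates is a hypothetical helper name used only in this sketch.
list_cstates() {
    base="${1:-/sys/devices/system/cpu/cpu0/cpuidle}"
    for state in "$base"/state*; do
        # The glob stays unexpanded when no state directories exist.
        [ -r "$state/name" ] || continue
        printf '%s\t%s us\n' "$(cat "$state/name")" "$(cat "$state/latency")"
    done
}

list_cstates
```

States with a large reported latency are the ones most likely to show up as delays in reacting to the timer interrupt.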

Some extra info:

If you have a CPU that is C-state capable, add the parameter "idle=poll" to GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub and run update-grub. With "idle=poll" the CPU stays in a loop checking whether it is needed, rather than entering C1 and waiting for a wakeup call. I've seen latencies go from 30-50us to around 4us (1-2us even) on 6 different systems.

If you have an Intel CPU, you might also want to check the output of the following command:

cat /sys/devices/system/cpu/cpuidle/current_driver

If it says: intel_idle, you have to ALSO add the following line to the GRUB file: "intel_idle.max_cstate=0 processor.max_cstate=0"

The reason for this is that Linux now uses the intel_idle driver (which replaces acpi_idle) to control the C-states, and this driver ignores the BIOS settings regarding C-states. The above line will disable C-states other than C1.

I have not performed any tests on AMD based systems, but I think the "idle=poll" should also work on those.
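The driver check described above can be sketched as a small script. This only illustrates the rule given on this page; the function name suggest_idle_params is made up for the example, and falling back to plain "idle=poll" for any other driver follows the untested guess above rather than a measured result.

```shell
#!/bin/sh
# Print the kernel parameters this page suggests for a given cpuidle driver.
# suggest_idle_params is a hypothetical helper name used only in this sketch.
suggest_idle_params() {
    case "$1" in
        intel_idle) echo "intel_idle.max_cstate=0 processor.max_cstate=0 idle=poll" ;;
        # Assumption: every other driver gets only idle=poll.
        *)          echo "idle=poll" ;;
    esac
}

driver_file=/sys/devices/system/cpu/cpuidle/current_driver
if [ -r "$driver_file" ]; then
    driver=$(cat "$driver_file")
else
    driver=unknown
fi
echo "cpuidle driver: $driver"
echo "suggested additions: $(suggest_idle_params "$driver")"
```

The script only prints a suggestion; it does not touch /etc/default/grub.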

Example:

You have a Core2Duo E6550

Open a terminal window, and execute the following commands:

sudo -i

cat /sys/devices/system/cpu/cpuidle/current_driver

output: intel_idle

nano /etc/default/grub

edit the line GRUB_CMDLINE_LINUX_DEFAULT="-original parameters-" to GRUB_CMDLINE_LINUX_DEFAULT="-original parameters- intel_idle.max_cstate=0 processor.max_cstate=0 idle=poll"

Save and exit

update-grub

reboot

Afterwards, check your latency to see whether it has made a difference; do not run the "cpu hog" while doing so. If it did not help, I advise you to revert to your old settings, because running the system this way will cause the CPU to run hotter and use more energy.
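Before measuring latency it can be worth confirming that the parameters actually reached the running kernel. The following is a minimal sketch, assuming /proc/cmdline is available; the helper name check_cmdline is made up for this example, and the parameter list is the one from the Core2Duo example above.

```shell
#!/bin/sh
# Report whether each expected parameter appears on a kernel command line.
# Usage: check_cmdline "<command line>" param...
# Returns 0 if all parameters are present, 1 otherwise.
# check_cmdline is a hypothetical helper name used only in this sketch.
check_cmdline() {
    cmdline=" $1 "
    shift
    missing=0
    for param in "$@"; do
        case "$cmdline" in
            *" $param "*) echo "present: $param" ;;
            *)            echo "missing: $param"; missing=1 ;;
        esac
    done
    return "$missing"
}

check_cmdline "$(cat /proc/cmdline 2>/dev/null)" \
    idle=poll intel_idle.max_cstate=0 processor.max_cstate=0 \
    || echo "Some parameters are not active; re-check /etc/default/grub and run update-grub."
```

If a parameter is reported as missing after the reboot, the edit to /etc/default/grub was probably not saved or update-grub was not run.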

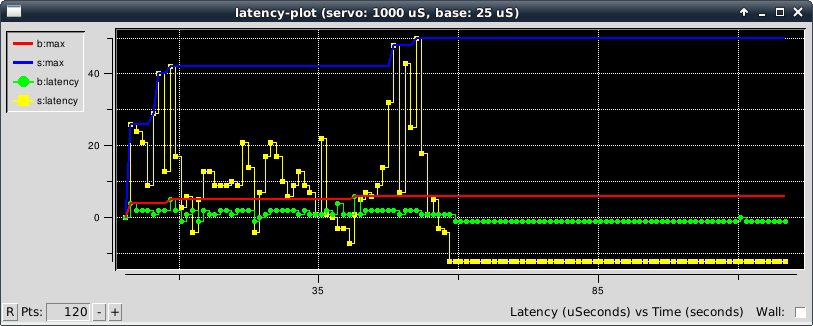

The screenshot shows a latency plot on a quadcore machine running with boot option "isolcpus=1,2,3" but without "idle=poll" or "nohlt". The servo thread latency varies wildly, by up to tens of microseconds, until around t=60s. At that point I ran the "cpu hog" as described above (while true ; do echo "nothing" > /dev/null ; done) and this caused the latency to settle down. A later reboot with the "idle=poll" option added also seems to solve the problem; after that, running the cpu hog does not have any noticeable further effect. This information could help identify when the "idle=poll" option could help. (The same machine showed no noticeable improvement with "nohlt".)
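To repeat this kind of diagnosis without leaving the hog running by accident, the loop can be wrapped so that it stops on its own. The following is a minimal sketch; the helper name run_hog and the idea of a fixed duration are assumptions added for illustration, not part of the original test.

```shell
#!/bin/sh
# Run the "cpu hog" loop from this page in the background for a fixed
# number of seconds, then stop it.
# run_hog is a hypothetical helper name used only in this sketch.
run_hog() {
    duration="$1"
    while true; do echo "nothing" > /dev/null; done &
    hog_pid=$!
    sleep "$duration"
    kill "$hog_pid"
    # wait reports the hog's termination by signal as a non-zero status; ignore it.
    wait "$hog_pid" 2>/dev/null || true
}
```

For example, calling run_hog 30 while the latency plot is running gives a 30 second window in which to see whether the latency settles down, as it did in the plot described above.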